Hand Gestures as the Remote: M5stack-Basic integrated robotic arm


Introduction

I am a freelancer specializing in machine learning and robotics. My interest began with an artificial intelligence course in college, which inspired me to explore new forms of human-machine interaction. For robotic arms in particular, I have long wanted to hide their operational complexity so that they become more intuitive and easier to use.

The inspiration for this project comes from my love of innovative technology and my interest in improving how humans interact with machines. My goal was to build a gesture-based control system for a robotic arm that non-specialists can operate with ease. For this, I chose Google's MediaPipe library for gesture recognition and used the myCobot 320 M5 as the experimental platform.

Technical Overview

Google MediaPipe

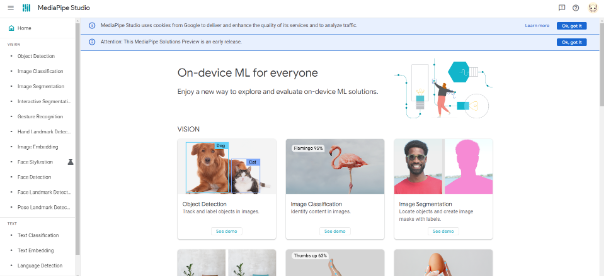

MediaPipe is an open-source cross-platform framework developed by Google, specifically designed for building various perception pipelines. This framework offers a wealth of tools and pre-built modules, enabling developers to easily build and deploy complex machine learning models and algorithms, especially in the field of image and video analysis.

A notable feature of MediaPipe is its support for real-time gesture and facial recognition: it can process a video stream and detect and track hand gestures and facial features frame by frame. This makes it useful in interactive applications, augmented reality (AR), virtual reality (VR), and robotics. You can try the gesture recognition feature online in MediaPipe Studio without installing anything.

Its easy-to-use API and comprehensive documentation make the framework straightforward to integrate, which makes it well suited to machine learning and computer vision work.

pymycobot

pymycobot is a Python API for controlling the myCobot robotic arm over serial communication. It provides a set of functions and commands that let developers control the arm's movements and behavior from Python. For example, you can use it to read the arm's joint angles, send angle commands to move the arm, or read and send the arm's coordinates.

The only requirement is that the library be used with a myCobot-series robotic arm, since it is specifically adapted to that series.

Product Introduction

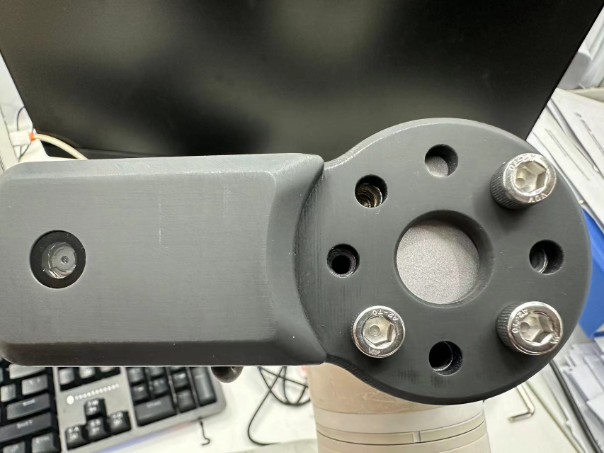

myCobot 320 M5stack

The myCobot 320 M5 is a six-axis collaborative robotic arm developed by Elephant Robotics. It has a working radius of 350 mm and a maximum payload of 1000 g. The arm supports an open ROS simulation development environment and includes forward and inverse kinematics algorithms. It can be programmed in multiple languages, including Python, C++, Arduino, C#, and JavaScript, and works with Android, Windows, macOS, and Linux. This versatility makes it suitable for a wide range of development and integration applications.

2D Camera

A 2D camera can be mounted on the end of the myCobot 320 and connected via a USB cable. It shows the view from the end of the robotic arm.

Development Process

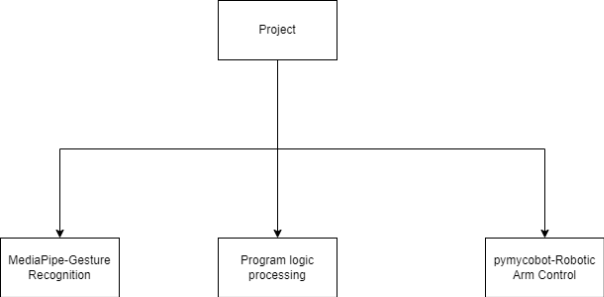

Project Architecture

I divided this project into three main functional modules:

Gesture Recognition: recognizes hand gestures and reports which gesture was detected, such as a thumbs-up.

Robotic Arm Control: handles the arm's motion control, including coordinate control and joint-angle control.

Program Logic: handles the program's control flow, such as how long a gesture must be held before it is confirmed and when recognition is reset. These are detailed in later sections.
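To make the division of labor concrete, here is a minimal sketch of one pass through the three modules for a single camera frame. It assumes each module is a plain Python callable; the names `run_frame`, `recognize`, `should_act`, and `actions` are hypothetical and not taken from the project's code.

```python
from typing import Callable, Dict, Optional


def run_frame(
    frame,
    recognize: Callable[[object], Optional[str]],
    should_act: Callable[[Optional[str], float], Optional[str]],
    actions: Dict[str, Callable[[], None]],
    now: float,
) -> Optional[str]:
    """Push one frame through the three modules; return the gesture acted on, if any."""
    gesture = recognize(frame)            # Gesture Recognition: e.g. "thumb_up" or None
    confirmed = should_act(gesture, now)  # Program Logic: confirmation time, cooldown
    if confirmed is not None and confirmed in actions:
        actions[confirmed]()              # Robotic Arm Control: run the mapped motion
        return confirmed
    return None


# Example with stand-in modules
log = []
result = run_frame(
    frame=None,
    recognize=lambda f: "thumb_up",
    should_act=lambda g, t: g,            # no debouncing in this toy example
    actions={"thumb_up": lambda: log.append("nod")},
    now=0.0,
)
print(result, log)
```

Keeping the gesture-to-action mapping in a dictionary means adding a gesture only requires a new entry, rather than another `elif` branch.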

Development Environment

Operating System: Windows 11

Programming Language: Python 3.9+

Libraries: opencv, pymycobot, mediapipe, time

Gesture Recognition

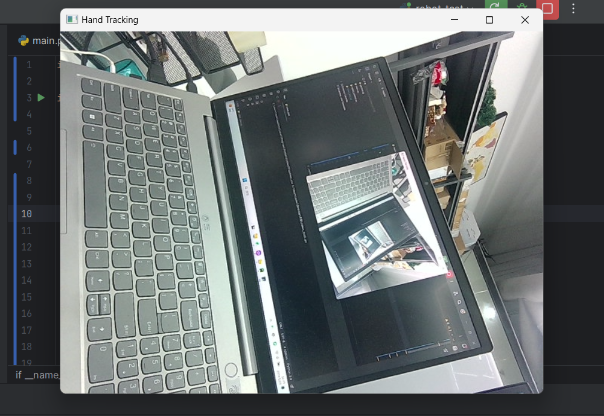

To perform gesture recognition, we first need to obtain a camera image. Here, we use the OpenCV library to access the camera feed.

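```python
import cv2

# Open the camera stream: the built-in camera is 0, external cameras are 1, 2, 3, ...
# Index 1 selects the external USB camera here
cap = cv2.VideoCapture(1)

# Continuously acquire camera frames
while cap.isOpened():
    # Read the current frame; stop if no frame could be read
    ret, frame = cap.read()
    if not ret:
        break
    # Convert the BGR image to RGB (MediaPipe expects RGB input)
    rgb_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    # Display the frame on the computer
    cv2.imshow('gesture control', frame)
    # Press the 'q' key to exit and avoid an infinite loop
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the camera and close the window
cap.release()
cv2.destroyAllWindows()
```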

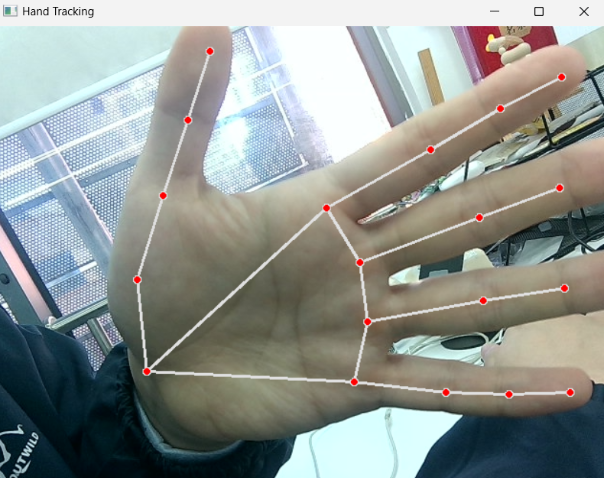

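With this, image capture from the camera works. Next, we use MediaPipe for gesture recognition. The Hands object is created once before the capture loop, and the detection step runs on each frame inside the loop, using rgb_frame from the code above, before cv2.imshow so that the drawn landmarks appear on screen.

```python
import mediapipe as mp

# Initialize the MediaPipe Hands module (once, before the capture loop)
mp_hands = mp.solutions.hands
hands = mp_hands.Hands()
mp_draw = mp.solutions.drawing_utils

# Inside the loop: process the RGB image and detect hands
result = hands.process(rgb_frame)
if result.multi_hand_landmarks:
    for hand_landmarks in result.multi_hand_landmarks:
        # Draw the 21 landmarks and their connections onto the displayed frame
        mp_draw.draw_landmarks(frame, hand_landmarks, mp_hands.HAND_CONNECTIONS)
```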

MediaPipe locates each joint of the hand precisely and gives every joint point a name. MediaPipe Hands provides 21 key points (landmarks) that together describe the structure of the hand, including the wrist and the joints of each finger. Taking the thumb as an example, it has four landmarks, which from bottom to top are CMC, MCP, IP, and TIP:

CMC: carpometacarpal joint

MCP: metacarpophalangeal joint

IP: interphalangeal joint

TIP: fingertip

Having these landmarks alone is not enough; we also need a rule for recognizing specific gestures. For example, to recognize a thumbs-up, note that during a thumbs-up the tip of the thumb is the highest point of the whole hand. This makes the check simple: if the thumb tip is higher in the image than the tips of all the other fingers, the gesture is classified as a thumbs-up. (Other analysis methods can also be used.)
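For reference, the 21 landmarks are numbered 0 to 20 in a fixed order. The sketch below rebuilds that numbering as a plain dictionary, assuming the naming of MediaPipe's HandLandmark enum, from WRIST (0) through PINKY_TIP (20). The names landmark_names, HAND_LANDMARKS, and FINGERTIPS are illustrative and not part of MediaPipe.

```python
def landmark_names():
    """Return MediaPipe's 21 hand-landmark names in index order (0 to 20)."""
    # Wrist first, then the thumb from its base joint to its tip
    names = ["WRIST", "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP"]
    for finger in ("INDEX_FINGER", "MIDDLE_FINGER", "RING_FINGER", "PINKY"):
        # Each non-thumb finger has MCP, PIP, and DIP joints plus a tip
        names += [f"{finger}_{joint}" for joint in ("MCP", "PIP", "DIP", "TIP")]
    return names


# Name -> index lookup, e.g. HAND_LANDMARKS["THUMB_TIP"]
HAND_LANDMARKS = {name: index for index, name in enumerate(landmark_names())}
# Indices of the five fingertips, thumb first
FINGERTIPS = [index for name, index in HAND_LANDMARKS.items() if name.endswith("_TIP")]

print(HAND_LANDMARKS["THUMB_TIP"], FINGERTIPS)
```

With MediaPipe installed, HAND_LANDMARKS["THUMB_TIP"] should equal int(mp_hands.HandLandmark.THUMB_TIP), which is a quick way to check the table.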

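For each landmark we can read three attributes: x, y, and z. x and y give the landmark's position in the image, normalized to the range 0 to 1 by the image width and height, and z is its relative depth, with the wrist as the reference. Because image y values grow downward, a landmark that is higher in the image has a smaller y.

```python
# Get the thumb-tip landmark
thumb_tip = hand_landmarks.landmark[mp_hands.HandLandmark.THUMB_TIP]
# Its vertical position: normalized, and a smaller y means higher in the image
print(thumb_tip.y)

# Determine the thumbs-up gesture
def is_thumb_up(hand_landmarks):
    thumb_tip = hand_landmarks.landmark[mp_hands.HandLandmark.THUMB_TIP]
    other_tips = [
        mp_hands.HandLandmark.INDEX_FINGER_TIP,
        mp_hands.HandLandmark.MIDDLE_FINGER_TIP,
        mp_hands.HandLandmark.RING_FINGER_TIP,
        mp_hands.HandLandmark.PINKY_TIP,
    ]
    # The thumb tip must be higher (smaller y) than every other fingertip
    return all(thumb_tip.y < hand_landmarks.landmark[tip].y for tip in other_tips)
```

If you want to recognize other gestures, you can define a specific rule for each one based on the characteristics of the hand shape. At this point, gesture recognition is complete.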
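As an example of a rule for another gesture, the sketch below defines a possible check for an open palm (the program logic later uses a "palm_open" gesture). It assumes the hand is held upright and treats a finger as extended when its tip is above its PIP joint. This heuristic and the helpers is_palm_open and to_pixels are hypothetical, not the author's implementation. to_pixels converts the normalized x, y values into pixel coordinates, which is handy for drawing or debugging.

```python
from types import SimpleNamespace

# Indices from MediaPipe's 21-point hand model
FINGER_TIPS = (8, 12, 16, 20)  # index, middle, ring, pinky tips
FINGER_PIPS = (6, 10, 14, 18)  # the PIP (middle) joint of the same fingers


def is_palm_open(landmarks):
    """Hypothetical 'palm_open' rule: every non-thumb finger is extended,
    i.e. its tip is above its PIP joint (smaller y, since image y grows downward)."""
    return all(landmarks[tip].y < landmarks[pip].y
               for tip, pip in zip(FINGER_TIPS, FINGER_PIPS))


def to_pixels(landmark, width, height):
    """Convert a landmark's normalized x, y (0 to 1) to integer pixel coordinates."""
    return int(landmark.x * width), int(landmark.y * height)


# Example with fake landmarks: all points at mid-frame, then fingertips raised
fake = [SimpleNamespace(x=0.5, y=0.5, z=0.0) for _ in range(21)]
for tip in FINGER_TIPS:
    fake[tip].y = 0.2
print(is_palm_open(fake), to_pixels(fake[8], 640, 480))
```

With real detections, call is_palm_open(hand_landmarks.landmark); the landmark list supports the same indexing as the fake list above.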

Robotic Arm Motion Control

My initial idea was simple: when the camera recognizes a gesture, send a control command to the robotic arm. Let's start with a simple action: making the robotic arm perform a nodding motion.

The pymycobot library offers many convenient functions for controlling the robotic arm. In the snippet below, port, baud, angles_list, coords_list, speed, and mode are placeholders for your own values.

```python
from pymycobot.mycobot import MyCobot
import time

# Connect to the robotic arm (port: serial port name, baud: baud rate)
mc = MyCobot(port, baud)
# Move the arm by joint angles: six angles in degrees, plus a speed
mc.send_angles(angles_list, speed)
# Move the arm by coordinates [x, y, z, rx, ry, rz], a speed, and a movement mode
mc.send_coords(coords_list, speed, mode)

# Nodding action (a method of the controller class; self.mc is the MyCobot instance)
def ThumpUpAction(self):
    # Move to the starting pose
    self.mc.send_angles([0.96, 86.22, -98.26, 10.54, 86.92, -2.37], 60)
    time.sleep(1.5)
    # Nod three times by alternating between two poses
    for count in range(3):
        self.mc.send_angles([0.79, 2.46, -8.17, 4.3, 88.94, 0.26], 70)
        time.sleep(1)
        self.mc.send_angles([-3.6, 30.32, -45.79, -46.84, 97.38, 0.35], 70)
        time.sleep(1)
    self.mc.send_angles([0.79, 2.46, -8.17, 4.3, 88.94, 0.26], 70)
    time.sleep(1)
    # Return to the starting pose
    self.mc.send_angles([0.96, 86.22, -98.26, 10.54, 86.92, -2.37], 60)
```

To make the overall code easier to read and modify, it helps to wrap the robotic arm functions in a class that is easy to call and extend.
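Because the nodding action is just a timed sequence of send_angles calls, it can be checked without hardware by handing it an object that only records those calls. The sketch below is a dry run under that assumption: FakeArm, nod, and the HOME/UP/DOWN pose names are hypothetical, and sleep is injectable so the check does not wait. The pauses in the original presumably give each move time to complete before the next angle command arrives.

```python
import time

# Joint-angle poses (degrees) taken from the nodding action above
HOME = [0.96, 86.22, -98.26, 10.54, 86.92, -2.37]
UP = [0.79, 2.46, -8.17, 4.3, 88.94, 0.26]
DOWN = [-3.6, 30.32, -45.79, -46.84, 97.38, 0.35]


class FakeArm:
    """Hypothetical stand-in for MyCobot: records commands instead of moving."""

    def __init__(self):
        self.commands = []

    def send_angles(self, angles, speed):
        self.commands.append((list(angles), speed))


def nod(arm, times=3, pause=1.0, sleep=time.sleep):
    """Replay the nodding sequence on any object with send_angles(angles, speed)."""
    arm.send_angles(HOME, 60)
    sleep(1.5)
    for _ in range(times):
        arm.send_angles(UP, 70)
        sleep(pause)
        arm.send_angles(DOWN, 70)
        sleep(pause)
    arm.send_angles(UP, 70)
    sleep(pause)
    arm.send_angles(HOME, 60)


# Dry run: no hardware and no waiting
arm = FakeArm()
nod(arm, sleep=lambda seconds: None)
print(len(arm.commands))  # 9 commands
```

To run it on the arm, pass the MyCobot instance instead of FakeArm() and keep the default time.sleep.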

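```python
from pymycobot.mycobot import MyCobot

class RobotArmController:
    def __init__(self, port):
        # Connect to the arm at 115200 baud
        self.mc = MyCobot(port, 115200)
        # Joint angles (degrees) of the initial pose
        self.init_pose = [0.96, 86.22, -98.26, 10.54, 86.92, -2.37]
        # Reference coordinates [x, y, z, rx, ry, rz]
        self.coords = [-40, -92.5, 392.7, -92.19, -1.91, -94.14]
        self.speed = 60
        self.mode = 0

    def ThumpUpAction(self):
        ...

    def OtherAction(self):
        ...
```

Program Logic Processing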
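The program logic calls mc.increment_x_and_send() for an open palm, but its body is not shown in this article. One possible implementation, assuming it steps the stored coords along the x axis (in millimeters) and resends them with send_coords, is sketched below. ArmControllerSketch, FakeCobot, and the 20 mm step are hypothetical; the controller receives its connection as an argument so it can be exercised without hardware.

```python
class FakeCobot:
    """Hypothetical stub that records send_coords calls instead of moving an arm."""

    def __init__(self):
        self.sent = []

    def send_coords(self, coords, speed, mode):
        self.sent.append((list(coords), speed, mode))


class ArmControllerSketch:
    """Hypothetical variant of RobotArmController that receives its connection."""

    def __init__(self, mc, step_mm=20):
        self.mc = mc  # MyCobot(port, 115200) on real hardware
        self.coords = [-40, -92.5, 392.7, -92.19, -1.91, -94.14]  # [x, y, z, rx, ry, rz]
        self.speed = 60
        self.mode = 0
        self.step_mm = step_mm

    def increment_x_and_send(self):
        # Move the target forward along x, then send the full pose again
        self.coords[0] += self.step_mm
        self.mc.send_coords(self.coords, self.speed, self.mode)


controller = ArmControllerSketch(FakeCobot())
controller.increment_x_and_send()
print(controller.mc.sent[-1])
```

On the real arm, pass MyCobot(port, 115200) instead of FakeCobot(). A real implementation should also bound x so that repeated "forward" commands cannot push the target outside the arm's 350 mm working radius.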

During debugging, a problem appeared. Recognition runs continuously on every frame, so if a gesture was recognized 10 times in one second, 10 commands were sent to the robotic arm. This was not what I had in mind.

Some additional program logic was therefore needed. My approach uses control variables and a 2-second confirmation window: the command for a gesture is issued only after the gesture has been held for 2 seconds. When a gesture appears, gesture_start_time records the moment, and every following frame checks how long it has been held. Once more than 2 seconds have passed, the gesture is confirmed and the corresponding robotic arm movement is executed. In this snippet, current_gesture is the gesture recognized in the current frame (or None if there is none), and mc is a RobotArmController instance.

```python
import time

# Initialization (before the capture loop)
# Whether a gesture is currently detected
gesture_detected = False
# Time at which the current gesture first appeared
gesture_start_time = None
# How long (seconds) a gesture must be held before it is confirmed
gesture_confirmation_time = 2
# Whether an action has already been triggered (used by the cooldown logic)
action_triggered = False

# Inside the capture loop, once per frame
current_time = time.time()
if current_gesture:
    if not gesture_detected:
        # The gesture just appeared: start timing it
        gesture_detected = True
        gesture_start_time = current_time
    elif current_time - gesture_start_time > gesture_confirmation_time and not action_triggered:
        # Held for more than 2 seconds: run the arm action for this gesture
        if current_gesture == "thumb_up":
            mc.ThumpUpAction()
```

However, this is still not sufficient: if the hand keeps holding the gesture beyond 2 seconds, commands keep being sent to the robotic arm on every frame. We therefore need a cooldown period that gives the arm enough time to complete its movement.

```python
import time

# Cooldown state (initialized before the capture loop)
action_triggered = False
cooldown_start_time = None
cooldown_period = 2

# Process the gesture (once per frame)
current_time = time.time()
if current_gesture:
    if not gesture_detected:
        gesture_detected = True
        gesture_start_time = current_time
    elif current_time - gesture_start_time > gesture_confirmation_time and not action_triggered:
        # Perform the action that corresponds to the gesture
        if current_gesture == "thumb_up":
            print('good good')
            mc.ThumpUpAction()
        elif current_gesture == "palm_open":
            print('forward')
            mc.increment_x_and_send()  # controller method not shown in this article
        # You can add more gestures and their corresponding actions here
        action_triggered = True
        cooldown_start_time = current_time
else:
    # No gesture in this frame: reset the confirmation timer
    gesture_detected = False
    gesture_start_time = None

# Once the cooldown has elapsed, allow the next action
if action_triggered and current_time - cooldown_start_time > cooldown_period:
    print('can continue')
    action_triggered = False
    cooldown_start_time = None
```

Video
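The same confirmation-plus-cooldown logic can be packaged into a small class that takes the time as an argument, which makes it easy to test without a camera. This is a sketch that follows the variables above; GestureGate and update are hypothetical names. Like the original, it does not restart the timer when the gesture changes mid-hold, so holding a gesture past the cooldown triggers the action again.

```python
class GestureGate:
    """Sketch of the confirmation + cooldown logic, with time passed in.

    A gesture must be held for more than confirm_s seconds before it fires;
    after firing, no new action fires until cooldown_s seconds have passed.
    """

    def __init__(self, confirm_s=2.0, cooldown_s=2.0):
        self.confirm_s = confirm_s
        self.cooldown_s = cooldown_s
        self.gesture_start_time = None   # None plays the role of gesture_detected = False
        self.cooldown_start_time = None  # not None plays the role of action_triggered = True

    def update(self, gesture, now):
        """Feed one frame's gesture (or None); return the gesture to act on, else None."""
        fire = None
        if gesture:
            if self.gesture_start_time is None:
                self.gesture_start_time = now
            elif (now - self.gesture_start_time > self.confirm_s
                  and self.cooldown_start_time is None):
                fire = gesture
                self.cooldown_start_time = now
        else:
            # No gesture in this frame: reset the confirmation timer
            self.gesture_start_time = None
        # Release the cooldown once it has elapsed
        if (self.cooldown_start_time is not None
                and now - self.cooldown_start_time > self.cooldown_s):
            self.cooldown_start_time = None
        return fire


# Simulated frames: (gesture, timestamp in seconds)
gate = GestureGate()
frames = [("thumb_up", 0.0), ("thumb_up", 1.0), ("thumb_up", 2.5), ("thumb_up", 3.0)]
print([gate.update(g, t) for g, t in frames])  # [None, None, 'thumb_up', None]
```

In the capture loop, action = gate.update(current_gesture, time.time()) would replace the inline state variables, and a non-None result is dispatched to the matching arm method.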

https://youtu.be/9vOPKO_IG9M

Summary

This project demonstrates a method of using gesture recognition to control the myCobot 320, creating a new form of human-machine interaction. Although currently only a limited number of gestures and corresponding robotic arm movements have been implemented, it lays the groundwork for broader applications of robotic arms in the future. The innovative attempt to combine gestures with robotic arm control has not only improved my programming skills but also enhanced my problem-solving abilities, providing valuable experience for future related projects.