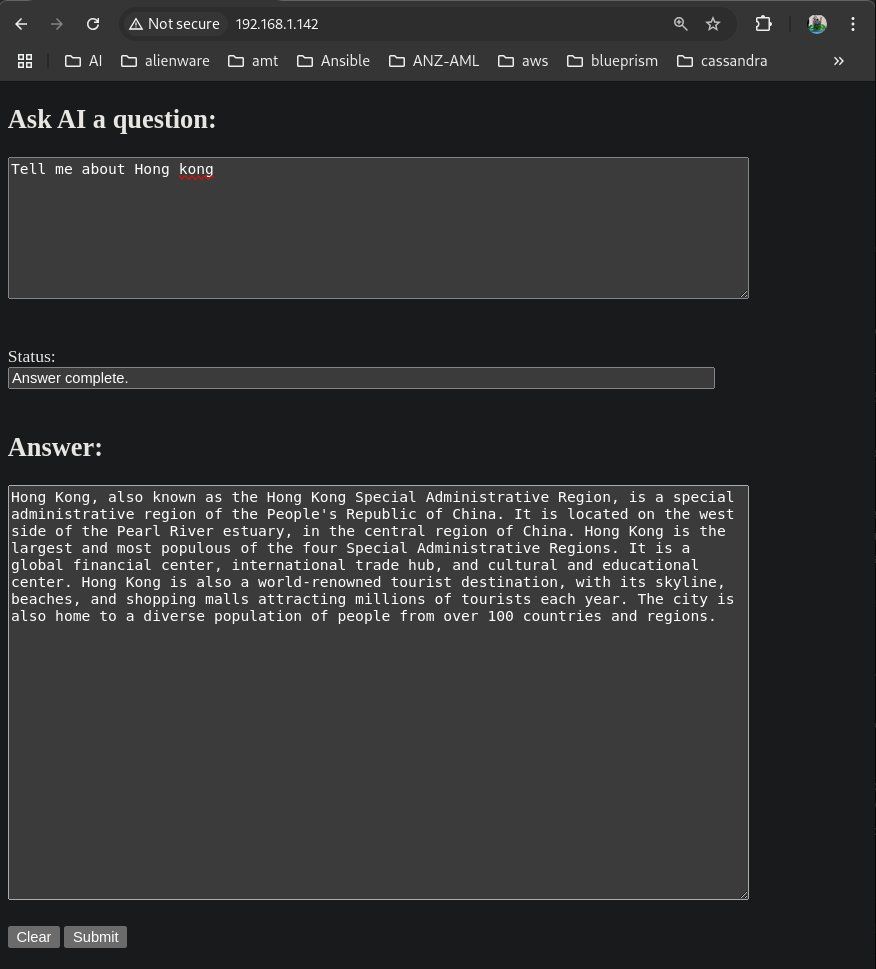

A Web UI for the LLM Module Kit Voice Assistant


The Voice Assistant Arduino example allows you to interact with the LLM Module Kit using only speech:

- It uses Keyword Spotting (KWS) to detect a spoken wake word

- Once the wake word is detected, it triggers the Automatic Speech Recognition (ASR) module to convert your spoken question for the LLM into text

- It then submits the converted text as input for LLM inference

- Finally, it outputs the inference result as speech using the Text To Speech (TTS) module.

A web UI complements the Voice Assistant in the following use cases:

- For a long answer, the Voice Assistant takes a long time to read it out as speech. Displaying the answer as text is much quicker, but the small screen of the M5Stack Core is not well suited to it

- When I want to copy all or part of the answer and use it somewhere else

- The web UI and the Voice Assistant can be used concurrently

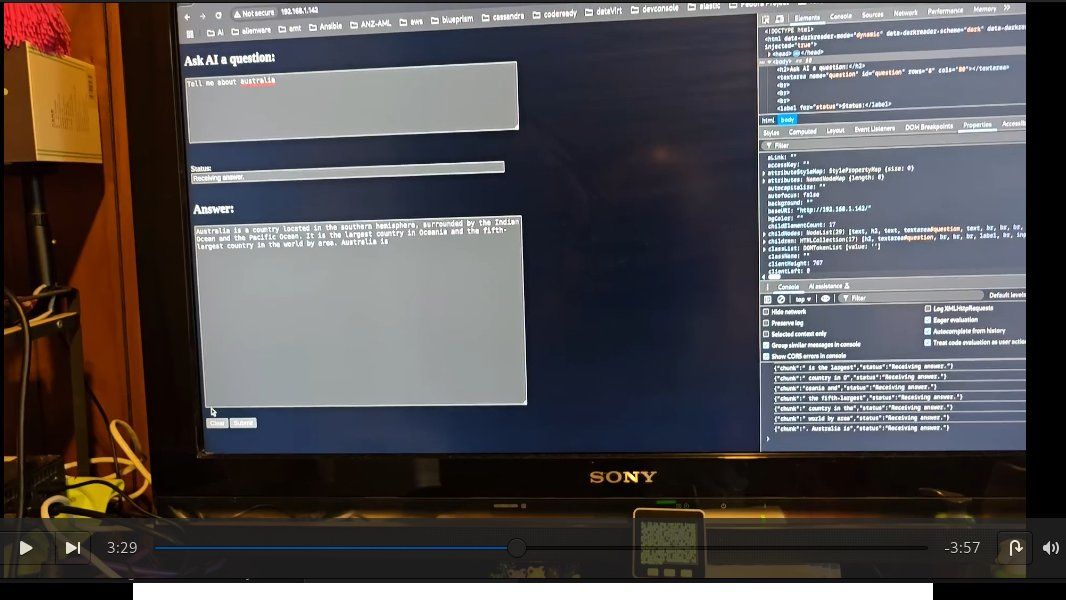

My YouTube videos show the web and voice UIs working independently and concurrently.

Web UI by itself

Web and Voice UI Concurrently

The source code can be found on GitHub.

Information on the M5Stack LLM Module Kit can be found here.

Information on the M5Stack Gray can be found here.

Enjoy!