Building an AI Robot: Leveraging ChatGPT for Smarter Robots (myCobot 280 M5Stack-Basic)


Introduction

Since OpenAI released ChatGPT, AI technology has rapidly been integrated into more and more robotic devices. Mechanical arms, an important part of automation and intelligent technology, are increasingly used in fields such as manufacturing, healthcare, and the service industry. With advances in AI, mechanical arms can not only perform complex operational tasks but also interact more intuitively through natural language processing, which greatly improves their flexibility and ease of use.

For example, Microsoft researchers have studied how to control robotic devices using natural language. This inspired me to build a similar project that lets users control a mechanical arm with natural language. Such a system can significantly lower the barrier to robot programming, so that non-professionals can easily operate the arm and experiment with it.

Paper link: https://www.microsoft.com/en-us/research/uploads/prod/2023/02/ChatGPT___Robotics.pdf

This project is divided into two parts. This article mainly covers the design and construction of the whole AI system. The next article will cover the difficulties encountered during development, how they were solved, and how the project could be extended.

Project Background and Motivation

Imagine one day, if you could command a mechanical arm to "help me tidy up the desk and throw the trash into the trash bin," and the mechanical arm starts to obey the command, cleaning up the trash on your desk. What a joyous thing that would be.

So, for preparation, we need a small mechanical arm (mainly because large mechanical arms are too expensive), a computer with internet access, and a passionate heart! The project is primarily inspired by Microsoft's research on using ChatGPT to control robots.

Technical Overview

The project is written in Python. Here are the main software technologies it uses:

ChatGPT: (The core technology crucial to the entire project)

https://openai.com/chatgpt

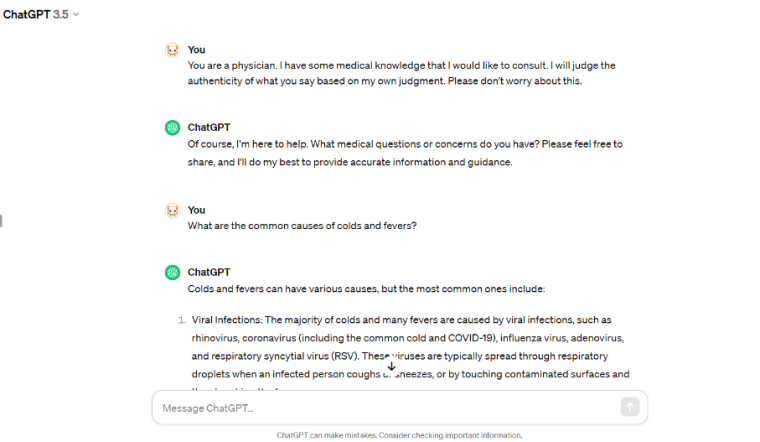

ChatGPT is an AI system based on the GPT (Generative Pre-trained Transformer) model architecture. GPT is a deep-learning natural language processing model that learns language understanding and generation through large-scale unsupervised pre-training followed by supervised fine-tuning. In other words, you can think of it as chatting with a very knowledgeable person. You can assign it a role in advance, such as "You are a doctor," and then discuss medical questions with it. However, not every generated answer is correct, so you need to judge the output yourself.

Speech Recognition: (An essential module for processing natural language)

We use Google's speech recognition service, Speech-to-Text, which converts speech into text. It supports many languages and dialects, including English, Spanish, French, German, and Chinese, so it meets the needs of users around the world. In this project's code, recognition is done through the `recognize_google()` method of the SpeechRecognition Python package (`speech_recognition`).

You can try it online: https://cloud.google.com/speech-to-text?hl=en#features

pymycobot: (Control module for the mycobot 280 mechanical arm)

GitHub: https://github.com/elephantrobotics/pymycobot

pymycobot is a Python control module developed by Elephant Robotics for its "my" series of mechanical arms. It significantly lowers the barrier to programming and controlling these arms. It provides a wide range of control interfaces, such as joint control, coordinate control, and gripper control, which makes it beginner-friendly for mechanical arm programming.

Together, these technologies make it possible to control a mechanical arm through natural language.

Next, let's introduce the hardware device:

mycobot 280 M5Stack

The mycobot 280 M5Stack is a 6-degree-of-freedom collaborative robot developed jointly by Elephant Robotics and M5Stack. It has a compact design with a fully enclosed body, high-precision servo motors, and no external cables. The mycobot weighs only 850 g, and the end of the arm can carry a maximum load of 250 g. Its maximum working radius is 280 mm, and its repeat positioning accuracy is within ±0.5 mm.
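The 280 mm working radius is also useful as a cheap sanity check before coordinates are sent to the arm, for example when ChatGPT asks for a relative move such as `plus_x_coords(value)`. This is a minimal sketch under stated assumptions. It assumes coordinates in the `[x, y, z, rx, ry, rz]` order (mm and degrees) used by pymycobot's `get_coords`/`send_coords`. It treats reach as the horizontal distance from the base axis, which is a simplification because the real reachable workspace is not a perfect cylinder. `within_reach` and `offset_coords` are hypothetical helpers and are not part of pymycobot.

```python
import math

# Maximum working radius of the mycobot 280, taken from the spec above.
WORKING_RADIUS_MM = 280.0
# Assumed pymycobot coordinate order: [x, y, z, rx, ry, rz].
AXES = {"x": 0, "y": 1, "z": 2}


def within_reach(coords, radius_mm=WORKING_RADIUS_MM):
    """Hypothetical check: is the (x, y) point within radius_mm of the base axis?

    Simplification: ignores z, joint limits, and the arm's real workspace shape.
    """
    return math.hypot(coords[0], coords[1]) <= radius_mm


def offset_coords(coords, axis, delta_mm, radius_mm=WORKING_RADIUS_MM):
    """Hypothetical helper: return a copy of coords shifted along one axis.

    Raises ValueError if the new point is outside the working radius,
    so a bad command is rejected before anything is sent to the arm.
    """
    if axis not in AXES:
        raise ValueError(f"unknown axis: {axis!r}")
    target = list(coords)          # never modify the caller's list
    target[AXES[axis]] += delta_mm
    if not within_reach(target, radius_mm):
        raise ValueError(f"target {target[:3]} is outside the {radius_mm} mm working radius")
    return target
```

Returning a new list instead of moving the arm keeps the check separate from the hardware call. A real `plus_x_coords` could then pass the result to `send_coords`.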

Design Concept and Implementation Process:

You might want to take a look at a recent video published by OpenAI, where a person chats with a robot that processes natural language and generates corresponding actions.

https://www.youtube.com/watch?v=Sq1QZB5baNw&pp=ygUVY2hhdGdwdCBjb250cm9sIHJvYm90

Other mechanical arms have also been shown working in similar scenarios.

https://www.youtube.com/watch?v=IGsYgSdrT4Y

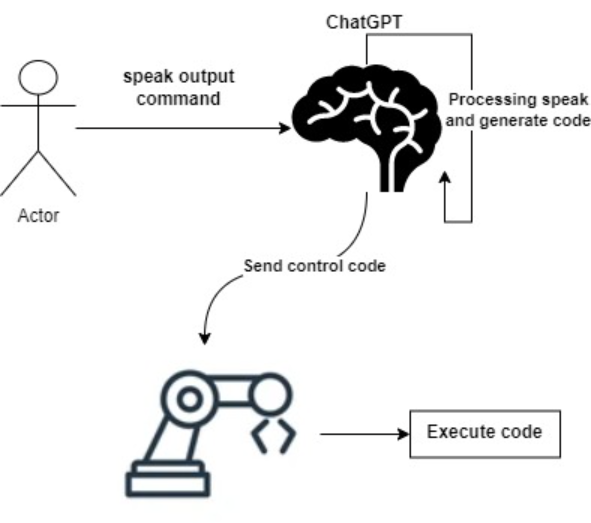

What I aim to do is create a smaller version of this! It involves communicating with a mechanical arm through natural language, after which the mechanical arm executes the corresponding instructions.
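The overall flow is a loop of three stages: turn speech into text, ask ChatGPT for control code, then run that code on the arm. This is a minimal sketch of that wiring. Each stage is passed in as a plain function so the loop can be tested without a microphone, network access, or hardware. `run_once` is a hypothetical name and does not come from the project's code.

```python
from typing import Callable, Optional


def run_once(
    listen: Callable[[], Optional[str]],
    ask_model: Callable[[str], str],
    execute: Callable[[str], None],
) -> Optional[str]:
    """Hypothetical pipeline: push one voice command through all three stages.

    Returns the generated code, or None if nothing was recognized
    or the model returned an empty answer.
    """
    command = listen()          # in the project: speech_to_text()
    if not command:
        return None             # nothing recognized, so nothing to send
    code = ask_model(command)   # in the project: generate_control_code(command)
    if not code:
        return None             # API error or empty reply
    execute(code)               # hand the generated code to the arm
    return code
```

Injecting the stages keeps each part replaceable. For example, the speech stage can be swapped for keyboard input while debugging.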

Next, I will explain the process of the project.

Speech-to-Text Functionality:

Why use speech-to-text? Anyone who has used ChatGPT knows it has a built-in voice chat feature. However, to connect ChatGPT to a PC and a mechanical arm, we cannot use the web version. Instead, we call ChatGPT from a local program through its API.

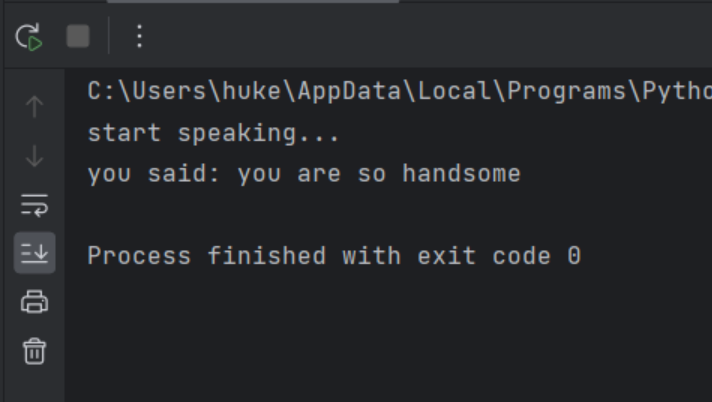

The ChatGPT API only accepts input in text form, so speech-to-text is used to turn our spoken command into text on the computer. The function below needs the SpeechRecognition package, plus PyAudio for microphone access:

```python
import speech_recognition as sr


def speech_to_text():
    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        print("start speaking...")
        audio = recognizer.listen(source)
    try:
        # For Chinese, use: recognizer.recognize_google(audio, language='zh-CN')
        text = recognizer.recognize_google(audio, language='en-US')
        print("you said: " + text)
        return text
    except sr.UnknownValueError:
        # Audio was captured but could not be transcribed
        print("Google Speech Recognition could not understand audio")
        return None
    except sr.RequestError as e:
        # The recognition service could not be reached
        print("Could not request results from Google Speech Recognition service; {0}".format(e))
        return None
```
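`speech_to_text()` returns `None` when recognition fails, so the caller has to decide what to do next. One simple option is to ask the user again a limited number of times. This is sketched here as an assumption, not as the project's actual behavior. The recognizer is passed in as a function so the loop can be tested without a microphone. `listen_with_retries` is a hypothetical name.

```python
from typing import Callable, Optional


def listen_with_retries(
    recognize: Callable[[], Optional[str]],
    max_attempts: int = 3,
) -> Optional[str]:
    """Hypothetical helper: call recognize() until it returns non-empty text.

    Gives up and returns None after max_attempts failed tries.
    """
    for attempt in range(1, max_attempts + 1):
        text = recognize()
        if text and text.strip():
            return text.strip()
        print(f"Nothing recognized (attempt {attempt}/{max_attempts}), please try again.")
    return None
```

In the project this would be called as `listen_with_retries(speech_to_text)`.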

Calling the ChatGPT API & Pre-training:

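Once we have the spoken command as text, we can call the API to chat with ChatGPT from a local program. `gpt-3.5-turbo` is a chat model, so it must be called through OpenAI's chat completions endpoint rather than the older `openai.Completion` interface. The version below uses the chat interface of the current `openai` Python package (v1.x):

```python
from openai import OpenAI

# OpenAI() reads the key from the OPENAI_API_KEY environment variable
client = OpenAI()


def generate_control_code(prompt):
    # pre_training is the instruction prefix described below
    prompt = f"{pre_training}The command the user wants to execute is:'{prompt}'."
    try:
        response = client.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=[{"role": "user", "content": prompt}],
            temperature=0.5,
            max_tokens=100,
            top_p=1.0,
            frequency_penalty=0.0,
            presence_penalty=0.0,
        )
        code = response.choices[0].message.content.strip()
        return code
    except Exception as e:
        print(f"error: {e}")
        return ""
```

You need to apply for your own API key on the OpenAI website (API usage is paid).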

Pay attention to how the prompt is built: this is the "pre-training" mentioned above. It is not training in the machine-learning sense. It is a fixed instruction prefix (`pre_training`) placed in front of every user command:

```python
prompt = f"{pre_training}The command the user wants to execute is:'{prompt}'."
```

To get accurate responses, we have to tell ChatGPT in advance what its job is and how we want it to respond. We will test the prompt with the web version first, because setting up the API takes more work.
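With OpenAI's Chat Completions API, which `gpt-3.5-turbo` requires, the instruction prefix does not have to be glued onto the user's words. It can go in a separate `system` message, which is the API's way of presetting a role. This is a minimal sketch that assumes the documented `messages` format (a list of `{"role": ..., "content": ...}` dictionaries). `build_messages` is a hypothetical helper, and `pre_training` stands for the project's prompt text.

```python
def build_messages(pre_training: str, command: str) -> list[dict]:
    """Hypothetical helper: return a Chat Completions `messages` list.

    The fixed instructions go in the system message. The user's spoken
    command goes in the user message, so the two never run together.
    """
    return [
        {"role": "system", "content": pre_training.strip()},
        {"role": "user",
         "content": f"The command the user wants to execute is: '{command.strip()}'."},
    ]
```

The result would be passed as `messages=build_messages(pre_training, text)` to `client.chat.completions.create`. Keeping the instructions in the system role also makes it easy to change the command without touching the prompt.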

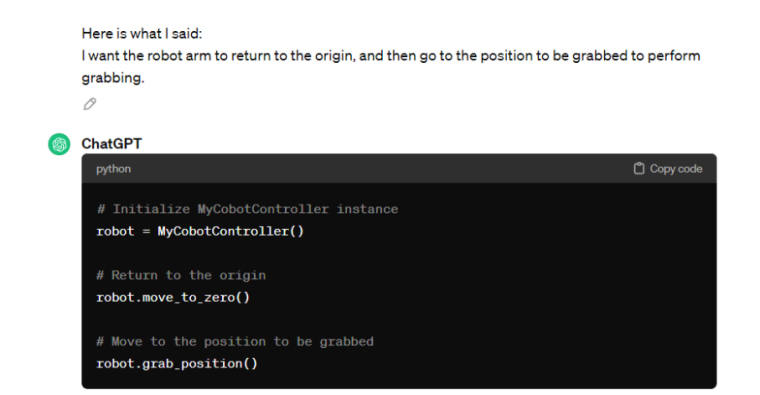

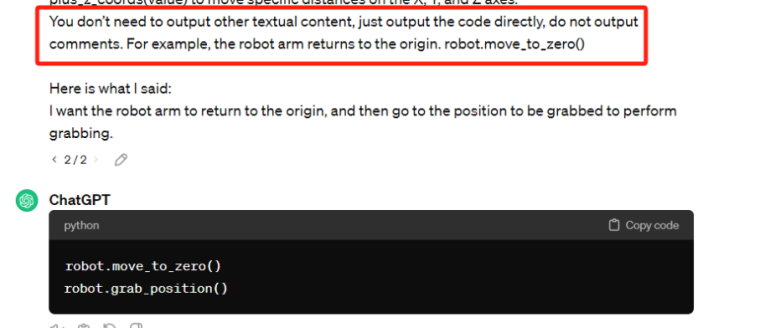

Below is my prompt (specific to this project). I only want ChatGPT to output the code for the mechanical arm to execute, so this is what I wrote:

> Generate Python code that matches the following requirements: Use an instance of the MyCobotController class, `robot`, to perform a specific action. The instance already contains methods such as `move_to_zero()` to return to the initial position, `grab_position()` to move to the grab position, and `plus_x_coords(value)`, `plus_y_coords(value)`, `plus_z_coords(value)` to move specific distances on the X, Y, and Z axes. You don't need to output other textual content, just output the code directly. For example, for "the robot arm returns to the origin": `robot.move_to_zero()`. Here is what I said: I want the robot arm to return to the origin, and then go to the position to be grabbed to perform grabbing.

Here you can see that it successfully meets my basic requirements, but it outputs the code with comments, which will affect our results later. So, modifications are still needed (to output only the code without comments).
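Even with a strict prompt, the model may still add comments or wrap its answer in Markdown fences, so a defensive post-processing step helps before the text is executed. This is a minimal sketch that uses Python's standard `tokenize` module to find real comments, so a `#` inside a string literal is left alone, and that also drops fence lines. `clean_generated_code` is a hypothetical helper and not part of the project. It may raise an exception if the model returns incomplete code, such as an unclosed bracket.

```python
import io
import tokenize


def clean_generated_code(text: str) -> str:
    """Hypothetical helper: strip Markdown fences, comments, and blank lines."""
    # 1. Drop fence lines such as ``` or ```python.
    lines = [ln for ln in text.strip().splitlines() if not ln.strip().startswith("```")]
    source = "\n".join(lines) + "\n"

    # 2. Record where each real comment starts (row -> column).
    comment_start = {}
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.COMMENT:
            row, col = tok.start
            comment_start[row] = col

    # 3. Cut each line at its comment and keep only non-empty lines.
    cleaned = []
    for row, line in enumerate(source.splitlines(), start=1):
        if row in comment_start:
            line = line[: comment_start[row]].rstrip()
        if line.strip():
            cleaned.append(line)
    return "\n".join(cleaned)
```

Cleaning on our side complements the prompt change. The prompt lowers how often comments appear, and the cleanup handles the cases where they still do.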

Building a New Mechanical Arm API

Why build a new API when pymycobot already provides one? pymycobot's API is indeed comprehensive. However, if the voice command is complex, ChatGPT may generate correspondingly complex code, which makes errors more likely. So I built a small new API for the mechanical arm that covers only the actions I wanted to test first.

```python
import time

from pymycobot.mycobot import MyCobot


class MyCobotController:
    def __init__(self, port, baud):
        self.mc = MyCobot(port, baud)
        self.speed = 80
        self.mode = 0
        self.coords = []

    def grab_position(self):
        # Alternative using joint angles:
        # self.mc.send_angles([4.83, 13.97, -99.31, -1.75, 4.39, -0.26], 80)
        # Move the end of the arm to the grab pose [x, y, z, rx, ry, rz]
        self.mc.send_coords([149.2, -48.3, 201.7, -176.98, 4.55, -84.66], 80, 0)
        time.sleep(2)

    def move_to_zero(self):
        # Send all six joints back to 0 degrees
        self.mc.send_angles([0, 0, 0, 0, 0, 0], 70)
        time.sleep(2)

    def gripper_open(self):
        # Gripper state 0 = open, 1 = close
        self.mc.set_gripper_state(0, 80, 1)
        time.sleep(2)
```

The goal is to set up the whole project quickly and enrich it later. There is a reason for this approach. For instance, if you want the mechanical arm to move to a point and grab something, the plain pymycobot calls look something like this:

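```python
import time

from pymycobot.mycobot import MyCobot

robot = MyCobot("COM3", 115200)  # example port; replace with your own serial port

robot.send_angles([0, 0, 0, 0, 0, 0], 80)
time.sleep(2)
# open gripper
robot.set_gripper_state(0, 80, 1)
time.sleep(1)
# close gripper
robot.set_gripper_state(1, 80, 1)
time.sleep(1)
```

In such cases ChatGPT has to output many lines of code, and in more complex situations every extra line is another chance for an error. By wrapping the sequence in a single method, ChatGPT only needs to call that one method to perform the action, which solves the problem with just two lines of code: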

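```python
import time

from pymycobot.mycobot import MyCobot


class Newmycobot(MyCobot):  # inherits send_angles, set_gripper_state, ...
    def grab_action(self):
        # Return all joints to zero
        self.send_angles([0, 0, 0, 0, 0, 0], 80)
        time.sleep(2)
        # Open gripper
        self.set_gripper_state(0, 80, 1)
        time.sleep(1)
        # Close gripper
        self.set_gripper_state(1, 80, 1)
        time.sleep(1)


robot = Newmycobot("COM3", 115200)  # example port; replace with your own serial port
robot.grab_action()
```

5. Preliminary Results and Demonstration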

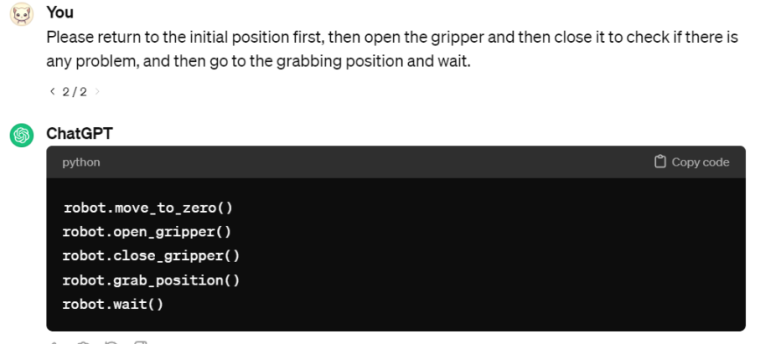

Let's start with a quick debugging session using the web version of ChatGPT to put it into practice.

Copy the generated code and run it.
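Copying and pasting works for a quick test. To run the output automatically, though, it is safer not to pass it straight to `exec`. This is a minimal sketch that assumes the generated code is a flat sequence of calls such as `robot.move_to_zero()` with literal arguments. It parses the text with Python's standard `ast` module, rejects anything that is not a call to an allowed `robot` method, and only calls the methods once the whole text has passed the check. `parse_robot_calls`, `run_robot_calls`, and `ALLOWED_METHODS` are hypothetical names. The method names are the ones listed in the project's prompt.

```python
import ast

# Hypothetical allow-list: the methods named in the project's prompt.
ALLOWED_METHODS = {
    "move_to_zero", "grab_position",
    "plus_x_coords", "plus_y_coords", "plus_z_coords",
}


def parse_robot_calls(code: str):
    """Return [(method_name, args), ...] or raise ValueError for disallowed code.

    A SyntaxError from ast.parse means the model returned invalid Python.
    """
    calls = []
    for node in ast.parse(code).body:
        # Each statement must be a bare expression that is a call ...
        if not (isinstance(node, ast.Expr) and isinstance(node.value, ast.Call)):
            raise ValueError(f"not a plain call: {ast.dump(node)}")
        func = node.value.func
        # ... of the form robot.<allowed method>(...)
        if not (isinstance(func, ast.Attribute)
                and isinstance(func.value, ast.Name)
                and func.value.id == "robot"
                and func.attr in ALLOWED_METHODS):
            raise ValueError(f"call not allowed: {ast.unparse(node.value)}")
        if node.value.keywords:
            raise ValueError("keyword arguments are not supported")
        # Only literal arguments such as 20 or -15.5 are accepted.
        args = [ast.literal_eval(arg) for arg in node.value.args]
        calls.append((func.attr, args))
    return calls


def run_robot_calls(code: str, robot) -> None:
    """Validate every call first, then execute them in order."""
    for name, args in parse_robot_calls(code):
        getattr(robot, name)(*args)
```

Because validation finishes before the first call, a reply that mixes valid calls with something unexpected moves nothing. Any object with the same method names, such as a `MyCobotController` or a test double, can be passed as `robot`.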

You can see that the simple test is successful.

6. Summary

That's all for this article. The project is not finished yet, and I will keep refining it. In the next article, I will complete the whole project and share how I solved the problems that came up during development. If you liked this article, feel free to leave your thoughts in the comments below.


As the control core of this embedded mechanical arm, the M5Stack Basic is very useful and helps a lot!